What and Why?

Imagine building a service for hosting static websites, you start with a bunch of servers fronted by a load balancer that probably uses Round Robin. Let's say you were immensely lucky and your little service's traffic went over the roof, because Justin Bieber started using your service. So, your service starts to crash because the backend store your servers uses to serve content from, is getting overloaded, you certainly can't blame the beliebers for it? Caching will certainly help reduce the load on the database, so you added a local cache in every server. And to increase the hits you wisely started to use Consistent Hashing as a load balancing algorithm, after all it's what Memcached uses right? But soon after that you saw that while you got better cache hits, the load became skewed, and that there's an increased load on the servers that hosts Justin's site. There's still a way to have the best of both worlds, high cache hits and bounded server load. This is where Consistent Hashing With Bounded Loads comes into play.

Consistent Hashing With Bounded Loads Explained

It's a variation of Consistent Hashing

that guarantees an upper bound on the maximum load of every server (ceil((total_load/number_of_server)*scale_factor)) relative to the average total load of the cluster.

The algorithm works the same way as Consistent Hashing but differs in how it selects a server in order to assign work to it.

The step goes as follows:

- Hash the given item to its corresponding interval in the circle, same as Consistent Hashing.

- Check the load of the selected server to see if it's below the maximum allowed load.

- If the server is below the maximum load then assign the request to it and increment both the load of the server and the total load of the cluster.

- Otherwise, start walking the circle clockwise until we find a server whose load is below the maximum load.

In pseudo code:

// initialize a hash table to keep track of the current load of each server

loadTable := {"127.0.0.1": 0, "192.0.0.1": 0. "88.0.0.1": 0}

// a counter that track the total load of the whole cluster

totalLoad := 0

// a function to calculate the maximum load allowed per server

def maxLoad() int:

// scale_factor controls the amount of overhead each server can take

// in this implementation a server will incur 25% load more than

// the average load per server

scale_factor = 1.25

// the 1 added to the average is there to make sure that

// the current request will always be served by a server no matter what

return ((totalLoad/length(loadTable))+1)*scale_factor

// a function that return true if the given host is can serve the current request

// otherwise it returns false

def loadOK(hostName string) bool:

if loadTable[hostName] < maxLoad():

loadTable[hostName]++

totalLoad++

return true

else:

return false

Client Example

An example of the code used by the client to assign requests to servers, using liblb/consistent Go package, which implements the Consistent Hashing algorithm.

lb := bounded.New("127.0.0.1:8009", "127.0.0.1:8008", "127.0.0.1:8007")

for i := 0; i < 10; i++ {

host, err := lb.Balance("hello, world!")

if err != nil {

log.Fatal(err)

}

lb.Inc(host)

// a host will get an upper load of ceil(((total_load/number_of_server)+1)*scale_factor)

// which for this example, is ceil(((10/3)+1)*1.25) = 6

fmt.Printf("Send request #%d to host %s\n", i, host)

lb.Done(host)

}

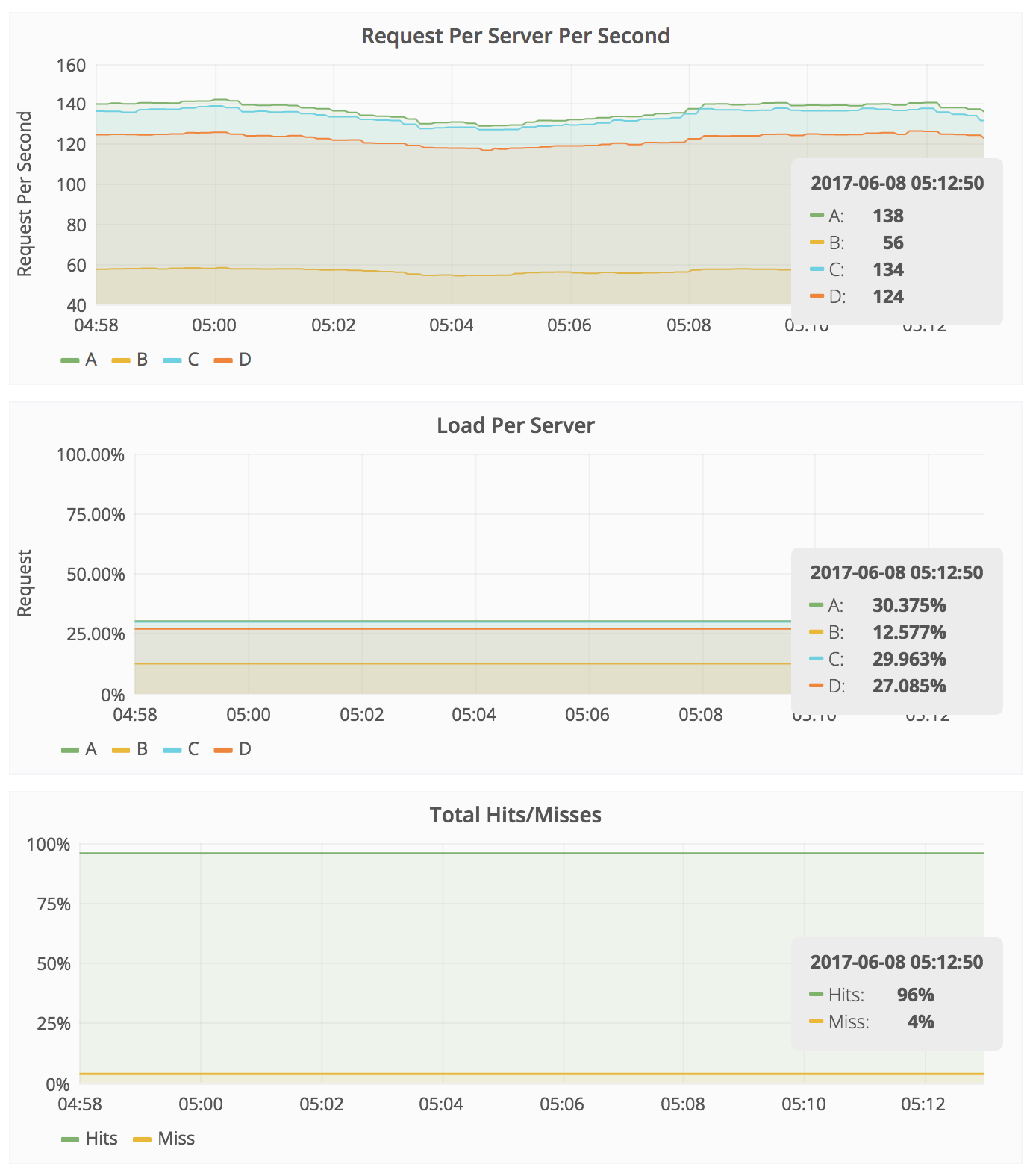

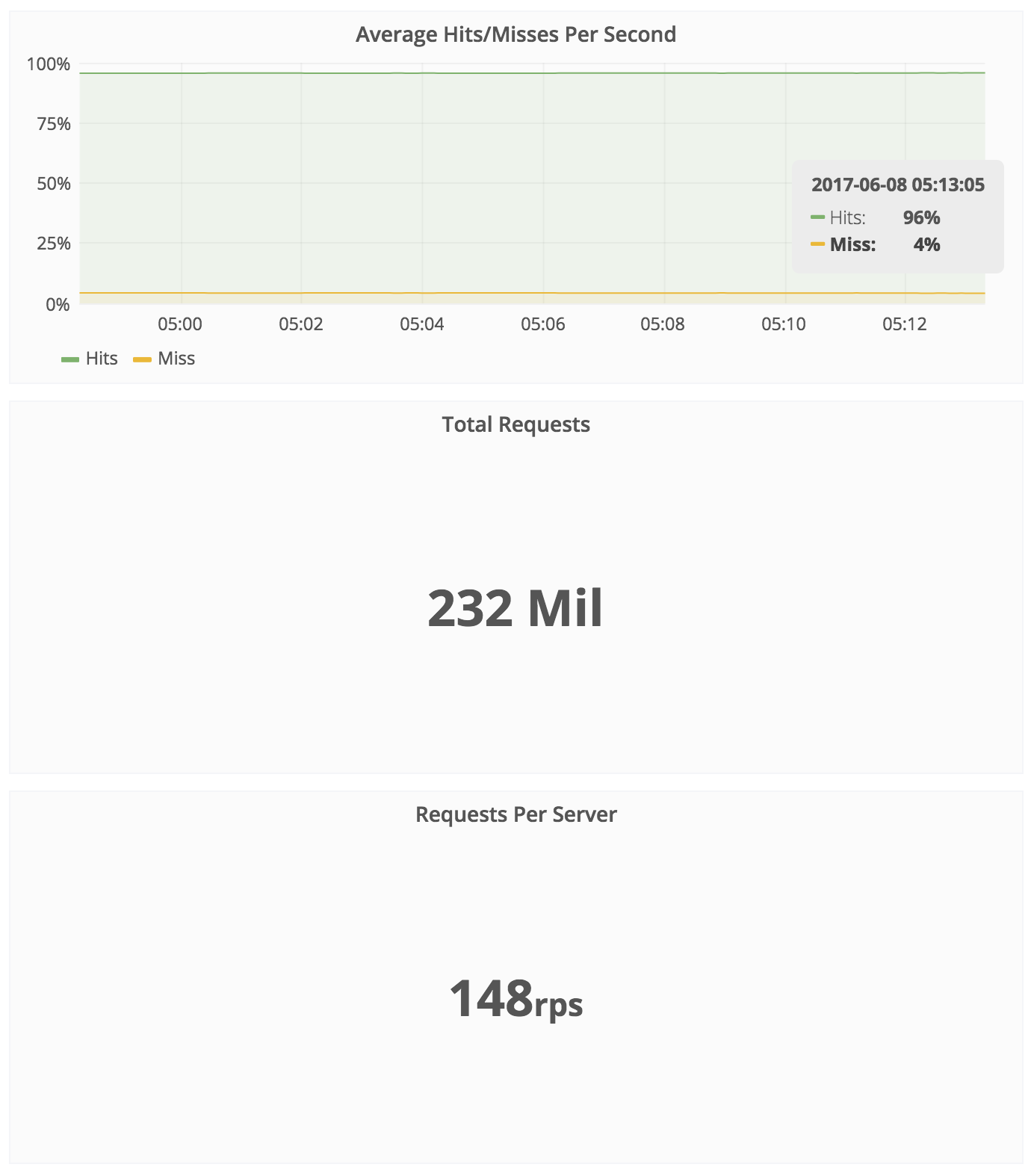

Experiment Results

Strengths

- When servers are not overloaded it works the same as Consistent Hashing, which means it'll keep the same key work to the same server until it gets overloaded.

- Adding or removing a host changes location of

1/(e^2)of the keys, whereeisscale_factor-1. In our examplescale_factoris 1.25 so the percentage of keys that change location is1/(0.25^2)resulting in 16% of the keys.

Weaknesses

- When servers are overloaded, it prioritize load over host consistency to achieve better load distribution. Making it not suitable for services that need to pick the same host at all times.

Use Cases

- Google are using it for their Google Cloud Pub/Sub service.

- Vimeo's SkyFire

- Works very well for caching systems, such as:

- Materlized Views of an Event Sourcing database.

- Anything that can compute its state from another source-of-truth system, which all instances have access to.